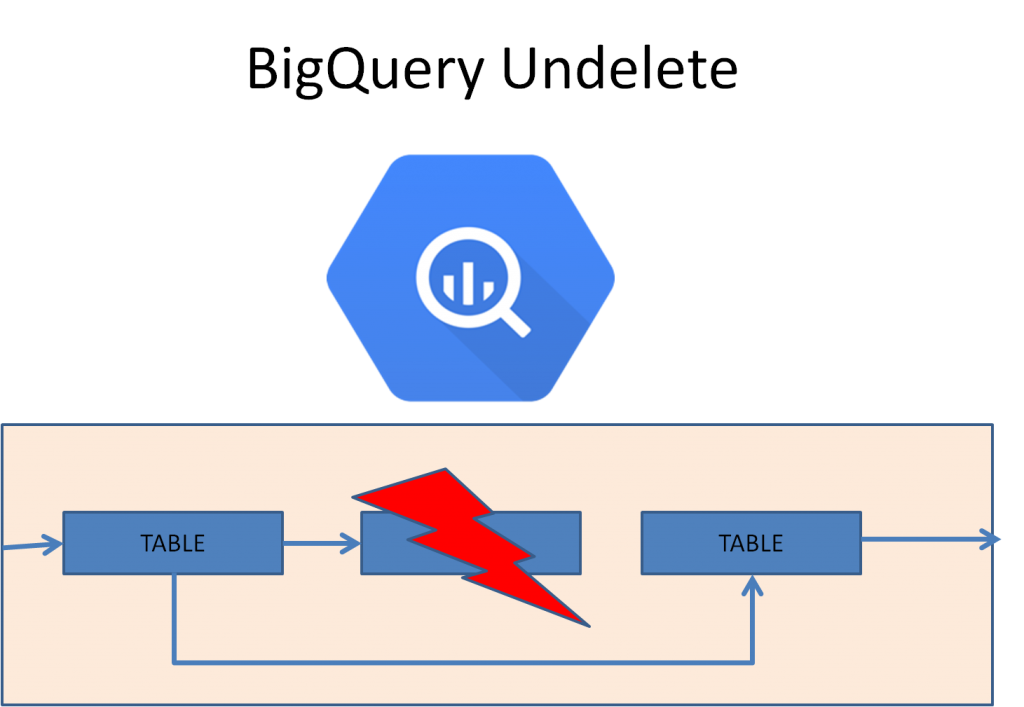

Disaster Strikes!

All hell broke loose when a developer deleted a table in production in BigQuery and we had to fight the fire early in the morning. The table was getting continuously populated through streaming data from pubsub. Since pubsub handles backlog, there was no fear of losing new data but what about the old data? The table already had billions of records. How do we get the data back? Do we need to get it from a backup of previous day?

In this blog, I talk about how we recovered the table, without losing any data by restoring the table to the point of deletion, though the Google documentation didn’t fully help in this.

Some Relief

We found this useful information from the Google documentation that talks about undelete of a table by getting a copy of the deleted table as of certain timestamp.

https://cloud.google.com/bigquery/docs/managing-tables#bq_2

You can undelete a table within seven days of deletion, including explicit deletions and implicit deletions due to table expiration. After seven days, it is not possible to undelete a table using any method, including opening a support ticket.

So we had a sigh of relief that we can recover the table without restoring from the backup. The documentation gives a method like below to recover the table using the @<time> snapshot decorator in the bq command-line tool:

bq cp mydataset.table1@1606281203000 mydataset.table1copyHere the time provided is the Unix time stamp in milliseconds when the table existed. You can get the seconds in Unix timestamp using the command like below:

date '+%s' --date='2020-11-25T05:13:23.106650677Z'You can add 000 at the end to convert it to milliseconds. You can also use a relative time stamp like below to get the table as of 1 hour back:

bq cp mydataset.table1@-3600000 mydataset.table1copyWe use 3600 seconds in an hour multiplied by 1000 to get the milliseconds. Now don’t ask me if Google maintains the table snapshots at milliseconds precision or not.

But the documentation does not talk about how to get the latest copy of the data as of the time of deletion. There is a command like :

bq cp mydataset.table1@0 mydataset.table1copyto get he oldest backup. But how about the latest backup of the deleted table?

Audit Log to the rescue!

We remembered Google maintains audit logs, and all delete operations are logged. And these audit logs are enabled by default and are maintained by Google for 6 months. We needed only last 7 days as BigQuery deleted table data is maintained for only 7 days. As a crude method, we dumped all the logging data using the below command:

PROJECT=`gcloud config get-value project`

gcloud logging read --project=$PROJECT > logsinto a file file and checked the file to get the table deletion time. Later we fine tuned the commands to save as part of disaster recovery recipe:

PROJECT=`gcloud config get-value project`

gcloud logging read "resource.type=bigquery_dataset AND logName=projects/${PROJECT}/logs/cloudaudit.googleapis.com%2Factivity AND protoPayload.serviceName: bigquery.googleapis.com AND protoPayload.methodName: google.cloud.bigquery.v2.TableService.DeleteTable" --limit 10 --format='table(timestamp,protoPayload.resourceName)' --freshness=7d

Note that above audit entry happens because the delete operation needs to get the IAM permission check. You may can play with limit and freshness above to get the desired results. You may get results like below depending on your system activity:

| TIMESTAMP | RESOURCE_NAME |

| 2020-11-25T05:13:23.036134Z | /myproject/datasets/mydataset/tables/table1 |

| 2020-11-24T12:48:37.133127Z | projects/myproject/datasets/mydataset/tables/temp1 |

Note that the timestamps above will be in UTC. We were thrilled to find out table name above and time stamp was accurate with the time stamp provided by the developer, the culprit.(project,dataset and table names are changed to maintain privacy).

Recovery at last!

Now how did we use this to retrieve the table data? Commands follow:

#Get time in seconds

TS=`date '+%s' --date='2020-11-25T05:13:23.036134Z'`

#Convert to milliseconds

TMS=${TS}000

#Get snapshot of table just before the delete

bq cp mydataset.table1@$TMS mydataset.table1copy

Finally every one was happy that data was fully recovered.

Prevention is better than cure

Well, we learnt a lesson here but you don’t really want to get into this situation for the second time. Other than the principle of least privilege, you can remove the data set and table delete privileges from the developers and admins as well in production and add them only when you need to delete a table after undergoing all the approvals. Following IAM permissions are worth consideration:

- bigquery.datasets.delete

- bigquery.tables.delete

You can remove these permissions and add them only when needed. Adding them only takes a few seconds to take effect.

Conclusion:

Disasters happen and one needs to be prepared to handle them with detailed and clear instructions. While you can recover from deletion of a table in BigQuery, the documentation comes short of providing full help. Here I have elaborated how we found a way to come out of such a situation.

About the Author

Ganapathi is a practicing expert in Cloud Computing, Big data, Hadoop, Storm and Spark. He is a Google Certified Cloud Architect. He has been managing database projects for many years and is consulting clients on Big Data and Cloud implementations. He is a Cloudera certified Hadoop administrator and also a Sybase certified database administrator. He has worked with clients in US, UK, Australia, Japan and India on many large projects. He has helped in implementing large database projects in Sybase, Oracle, Informix, DB2, MySQL and recently SAPHANA. He has been using big data technologies like Apache Hadoop and Apache Spark and has been providing strategies for dealing with large databases and performance issues and helping in setting up big data clusters. He also conducts lot of trainings on Big Data and hadoop ecosystem of products like HDFS, mapreduce, hive, pig, Hbase, sqoop, flume, Cassandra, Kafka, Storm and Spark. He is based out of Bangalore, India. He can be reached at ganapathid@spideropsnet.com. He also has online big data courses on Udemy and you can subscribe to these courses at https://www.udemy.com/user/ganapathi-devappa/