By Ganapathi Devappa

When I discuss big data solutions with clients, they often say that relational databases cannot handle their data and ask whether they can try Cassandra. Usually they have simply heard of Cassandra as a fast No-SQL database and want to try it out. I have been working with Cassandra for the last two months and have finally found some time to write about it.

What is in a name?

What is in the name Cassandra? It is a catchy and mysterious name. It comes from Cassandra of Greek mythology, who had the power of prophecy but was never believed. Likewise, the product is supposed to run very fast and address all your problems, but you will probably not believe it until you try it. The name is also memorable: if someone mentions it alongside the many Hadoop projects, you will probably remember Cassandra more than any of the other names.

What is it?

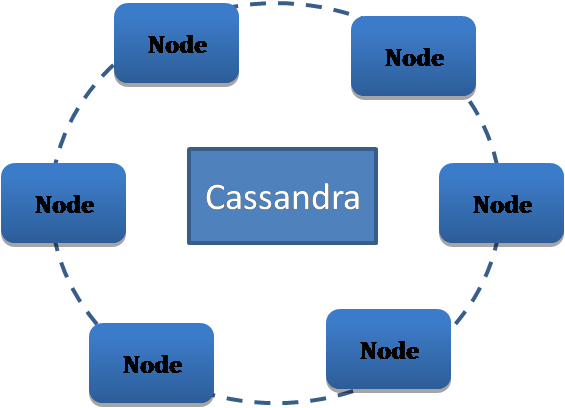

Cassandra is an open source Apache project. It is often mentioned alongside Hadoop, but it is a separate top-level project and does not depend on Hadoop. Apart from the open source community, it is also heavily supported by DataStax. It is a distributed, highly scalable, fault-tolerant and high-performance No-SQL database. It is based on key-value storage and does not run on the MapReduce framework. It runs on a fault-tolerant, ring-based cluster with an ‘all master’ architecture.

What is No-SQL?

Architects and CTOs seem to be fascinated by the term ‘No-SQL’, and when they think they have to handle a lot of data in a new project, they first want to consider a No-SQL alternative. They don’t want to settle for the time-tested RDBMS that they already know everything about. So let me first explain the term No-SQL before I delve further into Cassandra.

No-SQL is the umbrella term for databases that don’t follow RDBMS principles, especially the ACID principles (Atomicity, Consistency, Isolation and Durability). As data sizes grew from gigabytes to terabytes and petabytes, the ACID guarantees seemed to hold the databases back, and this limited their scalability.

Then came the CAP principle, also known as the CAP theorem or Brewer’s theorem. CAP refers to the Consistency, Availability and Partition tolerance of a distributed database system. Eric Brewer conjectured that it is not possible to guarantee all three simultaneously in a distributed system, and Seth Gilbert and Nancy Lynch later published a formal proof. So No-SQL databases promise two out of the three and compromise on the third to achieve higher scalability.

Cassandra provides tunable consistency. Writes are fast because a write does not have to wait for all copies of the data to be updated before returning success. Cassandra still promises eventual consistency, so all copies of the data are brought back into sync over time.
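To make the trade-off concrete, here is a minimal sketch of the usual rule of thumb behind tunable consistency: with N copies of the data, a read is guaranteed to see the latest successful write when the number of replicas that acknowledge the write (W) plus the number consulted by the read (R) is greater than N. The function names are hypothetical and chosen for illustration; this is not Cassandra's implementation.

```python
def quorum(replication_factor: int) -> int:
    """Smallest number of replicas that forms a majority of the copies."""
    return replication_factor // 2 + 1


def read_sees_latest_write(replication_factor: int, write_acks: int, read_acks: int) -> bool:
    """True when every read set must overlap every write set, i.e. R + W > N."""
    return read_acks + write_acks > replication_factor
```

With three copies, writing to one replica and reading from one replica is the fastest combination but only eventually consistent, so `read_sees_latest_write(3, 1, 1)` is false. Writing and reading at quorum, `read_sees_latest_write(3, quorum(3), quorum(3))`, is true, at the cost of waiting for two replicas on each operation.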

Disclaimer and use cases

If it is so fast and fault tolerant, can it be used as a general purpose database or for all my big data analytics needs? No. Cassandra is not a general purpose database; it was built for a particular purpose, to handle a limited set of use cases efficiently. It suits the following use cases:

- Storing large amounts of data quickly

- Getting data based on keys – single or composite

- Getting data ordered by whole or part of a key

- Getting data using partitions

This will be useful for industry use cases like:

Find all products purchased by a customer, ordered by amount spent

It is not useful where you want to aggregate data based on some keys. In those situations, it is better to load already aggregated and de-normalized data into Cassandra for processing.

The architecture

Cassandra has a ring-type cluster with an all-master architecture. Data is distributed automatically across all nodes, and it is replicated in a way similar to HDFS. A hash algorithm is used to distribute rows of data among the nodes in the cluster. Recent writes are kept in memory and written to disk lazily. This is similar to many in-memory databases.
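The hash-based placement on a ring can be sketched in a few lines of Python. This is an illustrative toy under stated assumptions, not Cassandra's partitioner: the `Ring` class, the `token_for` helper and the node names are hypothetical, each node is given a single token derived from its name, and MD5 is used only because it is available in the standard library.

```python
import bisect
import hashlib


def token_for(key: str) -> int:
    """Hash a key to a position on the ring (MD5 here, for illustration only)."""
    return int.from_bytes(hashlib.md5(key.encode("utf-8")).digest()[:8], "big")


class Ring:
    def __init__(self, nodes, replication_factor=3):
        # Assumption: one token per node, derived from the node's name.
        self.replication_factor = min(replication_factor, len(nodes))
        self.tokens = sorted((token_for(name), name) for name in nodes)

    def replicas(self, key: str):
        """Return the owner of the key followed by the next nodes clockwise."""
        positions = [token for token, _ in self.tokens]
        # First node whose token is >= the key's token, wrapping around the ring.
        start = bisect.bisect_left(positions, token_for(key)) % len(self.tokens)
        return [self.tokens[(start + i) % len(self.tokens)][1]
                for i in range(self.replication_factor)]
```

For example, `Ring(["node-a", "node-b", "node-c", "node-d"]).replicas("emp:104")` returns three distinct node names, and it returns the same three every time, because the placement depends only on the hash of the key.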

Because placement is decided by the hash of the key, Cassandra can distribute data to nodes transparently and deterministically, so any node can work out where a given row lives. Any node can accept any request. If the data is on that node, it returns the data immediately; otherwise it forwards the request to the nodes that hold it. The number of replicas is specified by the user for each keyspace and can be modified at any time. Writes are first recorded in a commit log on disk and in an image in memory. The consistency levels can be tuned by the user.
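The write path described above, with a commit log on disk, an image in memory and a lazy write to disk, can be sketched as follows. In Cassandra the in-memory image is called a memtable and the files it is flushed to are called SSTables; everything else here (the `ToyNode` class, the list standing in for the log, the flush threshold) is a hypothetical simplification.

```python
class ToyNode:
    def __init__(self, flush_threshold=3):
        self.commit_log = []   # stands in for the append-only log on disk
        self.memtable = {}     # in-memory image of recent writes
        self.sstables = []     # immutable sorted tables flushed from memory
        self.flush_threshold = flush_threshold

    def write(self, key, value):
        self.commit_log.append((key, value))   # record the write for durability first
        self.memtable[key] = value             # then update the in-memory image
        if len(self.memtable) >= self.flush_threshold:
            self.flush()

    def flush(self):
        # Lazily persist the memtable as a sorted, immutable table.
        self.sstables.append(dict(sorted(self.memtable.items())))
        self.memtable = {}
        self.commit_log.clear()                # flushed entries no longer need the log

    def read(self, key):
        if key in self.memtable:               # newest data lives in memory
            return self.memtable[key]
        for table in reversed(self.sstables):  # then newest flushed table first
            if key in table:
                return table[key]
        return None
```

The point of the design is that a write only costs a sequential append plus a memory update, which is why writes are fast; the more expensive work of producing sorted files happens later and in bulk.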

Cassandra supports multiple data centers, with each data center being set up as a ring. Within each data center, you can have multiple racks. The snitch that makes Cassandra aware of this topology is selected in a configuration file called cassandra.yaml, and depending on the snitch, the data center and rack of each node are listed in an accompanying properties file. Remember that HDFS supports only rack-level topology and not data centers.

A gossip protocol is used for inter-node communication so that each node learns the status of the other nodes in the cluster.
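The idea behind gossip can be illustrated with a small sketch: each node keeps a view of the heartbeat counters it has heard about, and when two nodes talk they both keep the newest value for every node. The function names are hypothetical, and the pairs of nodes are passed in explicitly to keep the example deterministic; a real gossip protocol picks its peers at random and exchanges much richer state.

```python
def merge_views(local: dict, remote: dict) -> dict:
    """Keep, for every node, the highest heartbeat counter either side has seen."""
    merged = dict(local)
    for node, heartbeat in remote.items():
        if heartbeat > merged.get(node, -1):
            merged[node] = heartbeat
    return merged


def gossip_round(views: dict, pairs) -> None:
    """Exchange state between the given pairs; both ends finish with the merged view."""
    for a, b in pairs:
        merged = merge_views(views[a], views[b])
        views[a] = dict(merged)
        views[b] = dict(merged)
```

Starting from `views = {"n1": {"n1": 5}, "n2": {"n2": 7}, "n3": {"n3": 2}}`, one call to `gossip_round(views, [("n1", "n2"), ("n2", "n3")])` is enough for n3 to learn about n1 without ever talking to it directly, which is how status information spreads without a central master.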

Benefits

The benefits Cassandra delivers to your business include:

- Elastic scalability – Allows you to easily add capacity online to accommodate more customers and more data whenever you need.

- Always on architecture – Contains no single point of failure (as with traditional master/slave RDBMS’s and other NoSQL solutions) resulting in continuous availability for business-critical applications that can’t afford to go down, ever.

- Fast linear-scale performance – Enables sub-second response times with linear scalability (double your throughput with two nodes, quadruple it with four, and so on) to deliver response time speeds your customers have come to expect.

- Flexible data storage – Easily accommodates the full range of data formats – structured, semi-structured and unstructured – that run through today’s modern applications. Also dynamically accommodates changes to your data structures as your data needs evolve.

- Easy data distribution – Gives you maximum flexibility to distribute data where you need by replicating data across multiple data centers, the cloud and even mixed cloud/on-premise environments. Read and write to any node with all changes being automatically synchronized across a cluster.

- Operational simplicity – with all nodes in a cluster being the same, there is no complex configuration to manage so administration duties are greatly simplified.

- Transaction support – Delivers the “AID” in ACID compliance through its use of a commit log to capture all writes and built-in redundancies that ensure data durability in the event of hardware failures, as well as transaction isolation, atomicity, with consistency being tunable.

In addition, Cassandra requires no new equipment and is very economical to run. It also provides data compression (up to 80% in some cases), eliminates the hassle of memory caching software (e.g., memcached), and provides a query language (CQL) that’s similar to SQL, flattening the learning curve.

Cassandra Examples

Cassandra does not support full-fledged SQL. It provides a command line interface called cqlsh to run Cassandra commands. Its data access language is limited and mainly supports getting data by key, as below:

CREATE KEYSPACE sampledb WITH replication = {'class': 'SimpleStrategy', 'replication_factor': 3};

USE sampledb;

CREATE TABLE emp (empid int, deptid int, first_name varchar, last_name varchar, PRIMARY KEY (empid, deptid));

INSERT INTO emp (empid, deptid, first_name, last_name) VALUES (104, 15, 'jane', 'smith');

SELECT * FROM emp WHERE empid = 104 AND deptid = 15;

SELECT * FROM emp WHERE empid IN (130, 104) ORDER BY deptid DESC;

UPDATE emp SET first_name = 'Gena' WHERE empid = 104 AND deptid = 15;

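By default, Cassandra allows searches only by the primary key or by any indexes you have created. Searches on other columns are rejected with an error. Note also that an UPDATE must identify the row by its full primary key.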

Cassandra Datamodel

Cassandra started out with the grand vision of a column-family based datastore, with a data model of column families and super column families. This got derailed somewhere in later versions, and it has now become a row store with versioned columns. Instead of a column-family based datastore like HBase, it has become a key-value based datastore. So don’t be surprised if you see references to column families and super column families in old documentation but don’t see them in the language syntax.

As with a relational database schema, you can define keyspaces in Cassandra to hold a database schema. You can have tables defined within keyspaces. Tables have columns that can be single values or complex data types like maps, lists and sets.

Tables must have a primary key, which can contain a single column or multiple columns (a composite primary key). If the primary key has multiple columns, the data is partitioned by the first column and, within each partition, clustered and sorted by the remaining primary key columns. Unless a multi-column partition key is declared explicitly, data is always partitioned by the first column of the primary key. Tables can have other indexes defined as well so that you can search using those columns.
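The partition-then-cluster layout can be pictured as a dictionary of sorted lists. The sketch below is a toy model with hypothetical names (`ToyTable`, `insert`, `select`), not Cassandra's storage engine: the first primary key column selects the partition, and rows inside the partition are kept sorted by the remaining key.

```python
import bisect
from collections import defaultdict


class ToyTable:
    def __init__(self):
        # partition key -> list of (clustering key, row), kept sorted by clustering key
        self.partitions = defaultdict(list)

    def insert(self, partition_key, clustering_key, row):
        rows = self.partitions[partition_key]
        keys = [ck for ck, _ in rows]
        i = bisect.bisect_left(keys, clustering_key)
        if i < len(rows) and rows[i][0] == clustering_key:
            rows[i] = (clustering_key, row)        # same primary key: overwrite the row
        else:
            rows.insert(i, (clustering_key, row))  # keep the partition sorted

    def select(self, partition_key, descending=False):
        """Return the rows of one partition in clustering order."""
        rows = self.partitions.get(partition_key, [])
        return list(reversed(rows)) if descending else list(rows)
```

With employee 104 as the partition key and the department as the clustering key, `select(104)` returns that employee's rows already sorted by department, and `select(104, descending=True)` returns them in reverse. This is why ordering by the clustering columns is cheap, while ordering or searching by any other column is not supported without an index.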

Getting data into Cassandra

You can write data into Cassandra using INSERT statements, or you can use a tool like Sqoop to get data into Cassandra. Sqoop has special commands to import data directly into Cassandra. Cassandra provides a JDBC interface so that you can query the database from Java. Interfaces to other Hadoop products like Hive and HBase are still being developed and have not become mainstream.

Conclusion

Cassandra is a key-value based No-SQL database that shows a lot of promise with its ring-based architecture, no single point of failure and fast writes. It is highly scalable and is ready for big data. But its limited query support and lack of joins and aggregates make it unsuitable for some big data applications. It also has the limitation of being supported by only a few of the vendors who provide Hadoop support. Its success will depend on how quickly the development community can build robust interfaces to other Hadoop ecosystem products like Flume, Hive and HBase.

About the Author

Ganapathi is a practicing expert in Big Data, SAP HANA and Hadoop. He has been managing database projects for many years and consults with clients on Big Data implementations. He is a Cloudera certified Hadoop administrator and also a Sybase certified database administrator. He has worked with clients in the US, UK, Australia, Japan and India on many large projects. He has helped implement large database projects in Sybase, Oracle, Informix, DB2, MySQL and, recently, SAP HANA. He has been using big data technologies like Apache Hadoop and SAP HANA, providing strategies for dealing with large databases and performance issues, and helping to set up big data clusters. He also conducts a lot of training on Big Data and on Hadoop ecosystem products like HDFS, MapReduce, Hive, Pig, HBase, Sqoop, Flume, Cassandra and Spark. He is based in Bangalore, India. He can be reached at ganapathid@spideropsnet.com.